For me it’s cheating

Remind yourself that, in the long term, they are cheating themselves. Shifting the burden of thinking to AI means that these students will be unlikely to learn to think about these problems for themselves. Learning is a skill, problem solving is a skill, hell, thinking is a skill. If you don’t practice a skill, you don’t improve, full stop.

When/if these students graduate, if their most practiced skill is prompting an AI then I’d say they’re putting a hard ceiling on their future potential. How are they going to differentiate themselves from all the other job seekers? Prompting an AI is stupid easy, practically anyone can do that. Where is their added value gonna come from? What happens if they don’t have access to AI? Do they think AI is always going to be cheap/free? Do they think these companies are burning mountains of cash to give away the service forever?? When enshittification inevitably comes for the AI platforms, there will be entire cohorts filled with panic and regret.

My advice would be to keep taking the road less traveled. Yes it’s harder, yes it’s more frustrating, but ultimately I believe you’ll be rewarded for it.

My partner wrote EVERYTHING with ChatGPT. I kept having the same discussion with him over and over: Write the damn thing yourself. Don’t trust ChatGPT. In the end, we’ll need citations anyway, so it’s faster to write it yourself and insert the citations as you go than to retroactively figure them out for a chapter ChatGPT wrote. He didn’t listen to me, and had barely any citations in his part. I wrote my part myself. I got a good grade, he said he got one, too.

Don’t worry about it! The point of education is not grades, it’s skills and personal development. I have a 25-year career in IT, you know what my university grades mean now? Literally nothing! You know what the thinking skills I acquired mean now? Absolutely everything.

I switched to AirVPN about 6 months ago and I’ve been really happy with the service. Was previously using NordVPN, which was fine, but I was looking for a VPN provider that offered port forwarding and AirVPN does that. I don’t have hard stats on this, but I do feel that having access to port forwarding has improved my overall torrent speeds since switching.

Here’s the exact post that got the Proton CEO in trouble:

Maybe Gail Slater really is a great pick for Assistant Attorney General for the Antitrust Division. Frankly, I have no idea. But I won’t do business with any company that carries any water whatsoever for Trump.

I’d recommend AirVPN. Here’s why I’d recommend them, in their own words:

No traffic limit. No time limit.

No maximum speed limit, it depends only on the server load

Every protocol is welcome, including p2p. Forwarded ports and DDNS to optimize your software.

Not the person you replied to, but I’m in agreement with them. I did tech hiring for some years for junior roles, and it was quite common to see applicants with a complete alphabet soup of certifications. More often than not, these cert-heavy applicants would show a complete lack of ability to apply that knowledge. For example they might have a network cert of some kind, yet were unable to competently answer a basic hypothetical like “what steps would you take to diagnose a network connection issue?” I suspect a lot of these applicants crammed for their many certifications, memorized known answers to typical questions, but never actually made any effort to put the knowledge to work. There’s nothing inherently wrong with certifications, but from past experience I’m always wary when I see a CV that’s heavy on certs but light on experience (which could be work experience or school or personal projects).

That’s just what happens to CEOs of publicly traded companies when they have a bad year. And Intel had a really bad year in 2024. I’m certainly hoping that their GPUs become serious competition for AMD and Nvidia, because consumers win when there’s robust competition. I don’t think Pat’s ousting had anything to do with GPUs though. The vast majority of Intel’s revenue comes from CPU sales and the news there was mostly bad in 2024. The Arrow Lake launch was mostly a flop, and there were all sorts of revelations about overvolting and corrosion issues in Raptor Lake (13th and 14th gen Intel Core) CPUs. Broadly speaking, Intel is getting spanked by AMD in the enthusiast market, and AMD has also just recently taken the lead in datacenter CPU sales. Intel maintains a strong lead in corporate desktop and laptop sales, but the overall trend for their CPU business is quite negative.

One of Intel’s historical strengths was their vertical integration: they both designed and manufactured the CPUs. However, Intel lost the tech lead to TSMC quite a while ago. One of Pat’s big early announcements was “IDM 2.0” (“Integrated Device Manufacturing 2.0”), which was supposed to address those problems and beef up Intel’s ability to keep pace with TSMC. It suffered a lot of delays, and Intel had to outsource all Arrow Lake manufacturing to TSMC in an effort to keep pace with AMD. I’d argue that’s the main reason Pat got turfed. He took a big swing to get Intel’s integrated design and manufacturing strategy back on track, and for the most part did not succeed.

Being a private company has allowed Valve to take some really big swings. Steam Deck is paying off handsomely, but it came after the relative failure of the Steam Controller, Steam Link and Steam Machines. With their software business stable, they can allow themselves to take big risks on the hardware side, learn what does and doesn’t work, then try again. At a publicly traded company, CEO Gabe Newell probably gets forced out long before they get to the Steam Deck.

However, it’s worth mentioning that WireGuard is UDP only.

That’s a very good point, which I completely overlooked.

If you want something that “just works” under all conditions, then you’re looking at OpenVPN. Bonus: if you want to marginally improve the chance that everything just works, even in the most restrictive places (like hotel wifi), have your VPN use port 443 for TCP and 53 for UDP. These are the most heavily used ports for web and DNS, meaning your VPN traffic will just “blend in” with normal internet noise (disclaimer: yes, deep packet inspection exists, but rustic hotel wifis aren’t going to be using it ;)

Also good advice. In my case the VPN runs on my home server, there are no UDP restrictions of any kind on my home network and WireGuard is great in that scenario. For a mobile VPN solution where the network is not under your control and could be locked down in any number of ways, you’re definitely right that OpenVPN will be much more reliable when configured as you suggest.
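If you want to sanity-check a locked-down network before blaming the VPN itself, here’s a minimal Python sketch that tests whether a TCP port is reachable. The hostname in the comment is a placeholder I made up, so swap in your own endpoint. It only covers the TCP side (e.g. OpenVPN on 443); UDP is connectionless, so a simple connect test like this can’t tell you whether UDP traffic is being dropped.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection resolves the host and completes the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS failures.
        return False


# Example (placeholder hostname, not a real endpoint):
# tcp_port_open("vpn.example.com", 443)
```

If this returns False for 443 on the hotel wifi but True from your phone’s hotspot, the network is most likely filtering the traffic rather than the server being down.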

I use WireGuard personally. OpenVPN has been around a long time, and is very configurable. That can be a benefit if you need some specific configuration, but it can also mean more opportunities to configure your connection in a less-secure way (e.g. selecting an older, weaker encryption algorithm). WireGuard is much newer and supports fewer options. For example it only does one encryption algorithm, but it’s one of the latest and most secure. WireGuard also tends to have faster transfer speeds, I believe because many of OpenVPN’s design choices were made long ago. Those design choices made sense for the processors available at the time, but simply aren’t as performant on modern multi-core CPUs. WireGuard’s more recent design does a better job of taking advantage of modern processors so it tends to win speed benchmarks by a significant margin. That’s the primary reason I went with WireGuard.

In terms of vulnerabilities, it’s tough to say which is better. OpenVPN has the longer track record of course, but its code base is an order of magnitude larger than WireGuard’s. More eyes have been looking at OpenVPN’s code for more time, but there’s more than 10x as much OpenVPN code to look at. My personal feeling is that a leaner codebase is generally better for security, simply because there are fewer lines of code in which vulnerabilities can lurk.

If you do opt for OpenVPN, I believe UDP is generally better for performance. TCP support is mainly there for scenarios where UDP is blocked, or on dodgy connections where TCP’s more proactive handling of dropped packets can reduce the time before a lost packet gets retransmitted.

The next tech bubble, IMO.

Beware of reverse survivorship bias. We’d know relatively little about the smart deviants if they rarely get caught.

No, the opposite actually! Any amount of exercise will help with GI motility, but intense exercise causes the digestive system to slow down (source).

None of the above. The correct answer is walking. Moving around helps kickstart the GI tract.

Chaotic neutral. Vertical monitor is best code review monitor.

I don’t believe it actually does grow back faster, it just seems that way. The first inch or so gives the impression of growing back faster because the hairs are thicker at the base so they’re more visible and less prone to breakage.

Is there any benefit at all

Maybe! There’s at least some scientific evidence that chemical compounds in mushrooms can have medicinal effects.

Bias disclaimer: I put a lion’s mane mushroom tincture in my morning tea because it may have a neuroprotective effect (source). My father’s father had dementia, my father is currently in a home with profound dementia, the chances it’s going to happen to me are very high. It’ll be years before I know whether lion’s mane mushroom will do anything for me (and even then you couldn’t claim anything from one data point), but I’m willing to try anything as long as it’s affordable and has at least some plausible evidence behind it. This isn’t the only thing I’m doing of course, I’ve also overhauled my diet (MIND diet) and lost 30 pounds (obesity is correlated with dementia).

why can’t you make it your self by pulverizing dried mushrooms of the same variety they use into powder and making the coffee yourself?

You absolutely could. Or, you know, just eat some of the same mushrooms. The benefit of dried products like Ryze, or tinctures like the one I use, is that they’re convenient, easily transportable and shelf-stable. I’ve cooked up fresh lion’s mane mushrooms several times, but not super often because they’re not in many stores in my area and tend to be pricey for the amount you get. I’ve also grown my own from a kit but that takes significant time and a little bit of daily attention to maintain optimal growing conditions. The tincture is convenient and relatively affordable as far as daily supplements go.

TL;DR: the protocol requires there to be trusted token providers that issue the tokens. Who do you suppose are the trusted providers in the Google and Apple implementations? Google and Apple respectively, of course. Maybe eventually there would be some other large incumbents that these implementers choose to bless with token-granting rights. By its nature the protocol centralizes power on the web, which would disadvantage startups and smaller players.

Private State Tokens are Google’s implementation of the IETF Privacy Pass protocol. Apple has another implementation of the same protocol named Private Access Tokens. Mozilla has taken a negative position against this protocol in its current form, and its existing implementations in their current forms. See here for their blog post on the subject, and here for their more in-depth analysis.

I think you’re referring to FlareSolverr. If so, I’m not aware of a direct replacement.

Main issue is it’s heavy on resources (I have an rpi4b)

FlareSolverr does add some memory overhead, but otherwise it’s fairly lightweight. On my system FlareSolverr has been up for 8 days and is using ~300MB:

| NAME | CPU % | MEM USAGE |
| --- | --- | --- |
| flaresolverr | 0.01% | 310.3MiB |

Note that any CPU usage introduced by FlareSolverr is unavoidable because that’s how CloudFlare protection works. CloudFlare creates a workload in the client browser that should be trivial if you’re making a single request, but brings your system to a crawl if you’re trying to send many requests, e.g. DDOSing or scraping. You need to execute that browser-based work somewhere to get past those CloudFlare checks.
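To make that cost asymmetry concrete, here’s a toy proof-of-work sketch in Python. To be clear, this is NOT CloudFlare’s actual challenge (I don’t know its internals, and the function names and difficulty values are my own invention); it’s just an illustration of the general idea that a small puzzle is cheap to solve once, cheap for the server to verify, and expensive to solve thousands of times.

```python
import hashlib
from itertools import count


def _digest(challenge: str, nonce: int) -> str:
    """Hex SHA-256 of the challenge string followed by the nonce."""
    return hashlib.sha256(f"{challenge}{nonce}".encode()).hexdigest()


def solve_challenge(challenge: str, difficulty: int) -> int:
    """Brute-force a nonce whose digest starts with `difficulty` zero hex digits.

    Each extra digit multiplies the expected work by 16. This is the
    client-side cost that has to be paid somewhere.
    """
    prefix = "0" * difficulty
    for nonce in count():
        if _digest(challenge, nonce).startswith(prefix):
            return nonce


def verify(challenge: str, nonce: int, difficulty: int) -> bool:
    """Server-side check: a single hash, no matter how hard the puzzle was."""
    return _digest(challenge, nonce).startswith("0" * difficulty)
```

One solve at a low difficulty is barely noticeable, but a scraper firing off thousands of requests pays that cost every single time, which is the whole point.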

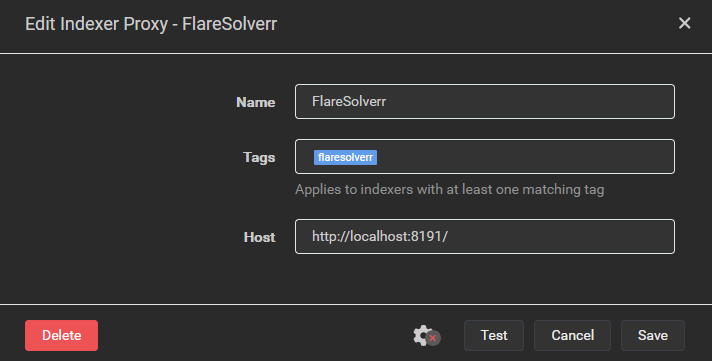

If hosting the FlareSolverr container on your rpi4b would put it under memory or CPU pressure, you could run the Docker container on a different system. When setting up FlareSolverr in Prowlarr you create an indexer proxy with a tag. Any indexer with that tag sends its requests through the proxy instead of sending them directly to the tracker site. When FlareSolverr is running in a local Docker container the address for the proxy is localhost, e.g. “http://localhost:8191”.

If you run FlareSolverr’s Docker container on another system that’s accessible to your rpi4b, you could create an indexer proxy whose Host is “http://<other_system_IP>:8191”. Keep security in mind when doing this. If you’ve got a VPN connection on your rpi4b with split tunneling enabled (i.e. connections to local network resources are allowed when the tunnel is up) then this setup would allow requests to these indexers to escape the VPN tunnel.
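Before pointing Prowlarr at the remote container, it can be worth checking from the rpi4b that the proxy actually answers. Here’s a rough Python sketch that sends one request through FlareSolverr’s HTTP API. The `/v1` path and the `request.get` command are based on my reading of the FlareSolverr README, so double check them against the version you’re running; the function names and the example IP are just placeholders of mine.

```python
import json
import urllib.request


def flaresolverr_endpoint(host: str, port: int = 8191) -> str:
    """Build the API URL for a FlareSolverr instance (8191 is its default port)."""
    return f"http://{host}:{port}/v1"


def build_get_payload(url: str, max_timeout_ms: int = 60000) -> dict:
    """JSON body asking FlareSolverr to fetch `url` with its built-in browser."""
    return {"cmd": "request.get", "url": url, "maxTimeout": max_timeout_ms}


def fetch_via_flaresolverr(host: str, url: str) -> dict:
    """POST the request to the proxy and return the decoded JSON response."""
    body = json.dumps(build_get_payload(url)).encode()
    req = urllib.request.Request(
        flaresolverr_endpoint(host),
        data=body,
        headers={"Content-Type": "application/json"},
    )
    # Allow a little longer than maxTimeout so the proxy can report its own errors.
    with urllib.request.urlopen(req, timeout=90) as resp:
        return json.load(resp)


# Example (placeholder IP, use your other system's address):
# result = fetch_via_flaresolverr("192.168.1.50", "https://example.com")
```

If the call times out or the connection is refused, sort out the network path (firewall, Docker port mapping) before touching the Prowlarr config.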

On a side note, I’d strongly recommend trying out a Docker-based setup. Aside from FlareSolverr, I ran my servarr setup without containers for years and that was fine, but moving over to Docker made the configuration a lot easier. Before Docker I had a complex set of firewall rules to allow traffic to my local network and my VPN server, but drop any other traffic that wasn’t using the VPN tunnel. All the firewall complexity has now been replaced with a gluetun container, which is much easier to manage and probably more secure. You don’t have to switch to a Docker-based setup all in one go, you can run hybrid if need be.

If you really don’t want to use Docker then you could attempt to install from source on the rpi4b. Be advised that you’re absolutely going offroad if you do this as it’s not officially supported by the FlareSolverr devs. It requires installing an ARM-based Chromium browser, then setting some environment variables so that FlareSolverr uses that browser instead of trying to download its own. Exact steps are documented in this GitHub comment. I haven’t tested these steps, so YMMV. Honestly, I think this is a bad idea because the full browser will almost certainly require more memory. The browser included in the FlareSolverr container is stripped down to the bare minimum required to pass the CloudFlare checks.

If you’re just strongly opposed to Docker for whatever reason then I think your best bet would be to combine the two approaches above. Host the FlareSolverr proxy on an x86-based system so you can install from source using the officially supported steps.